Organization name:

¥ 1.0

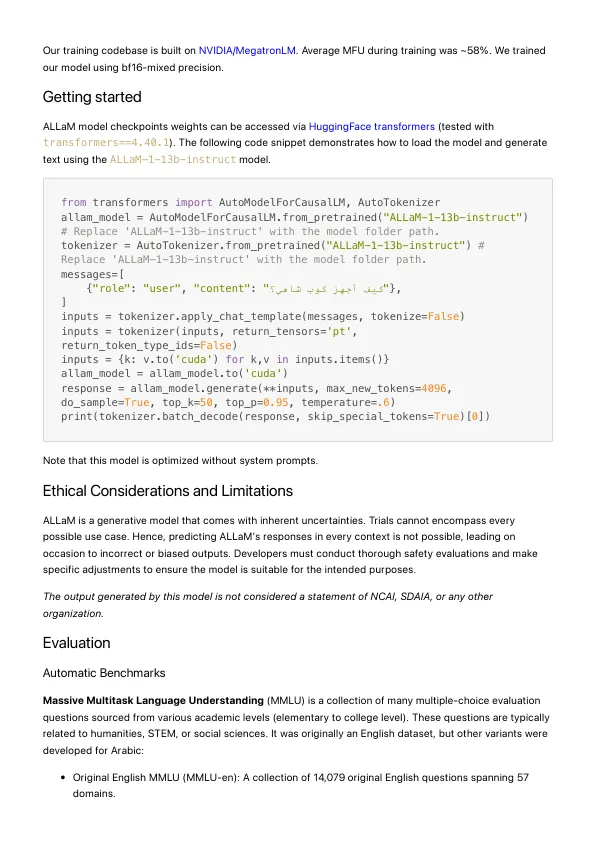

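```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Replace 'ALLaM-1-13B-Instruct' with the path to the model folder.
allam_model = AutoModelForCausalLM.from_pretrained("ALLaM-1-13B-Instruct")
# Replace 'ALLaM-1-13B-Instruct' with the path to the model folder.
tokenizer = AutoTokenizer.from_pretrained("ALLaM-1-13B-Instruct")

messages = [
    # Placeholder: the original prompt text was lost in extraction.
    {"role": "user", "content": "<your prompt here>"},
]

# Render the conversation with the model's chat template, then tokenize it.
inputs = tokenizer.apply_chat_template(messages, tokenize=False)
inputs = tokenizer(inputs, return_tensors='pt', return_token_type_ids=False)

# Move the input tensors and the model to the GPU.
inputs = {k: v.to('cuda') for k, v in inputs.items()}
allam_model = allam_model.to('cuda')

# Sample up to 4096 new tokens using top-k and top-p (nucleus) sampling.
response = allam_model.generate(
    **inputs,
    max_new_tokens=4096,
    do_sample=True,
    top_k=50,
    top_p=0.95,
    temperature=0.6,  # assumed value: the original number was garbled in extraction
)
print(tokenizer.batch_decode(response, skip_special_tokens=True)[0])
```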
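The `generate` call in the sample enables sampling (`do_sample=True`) and restricts it with `top_k=50`, `top_p=0.95` and a temperature. The sketch below illustrates what those three knobs do to a single next-token distribution. It is a plain-Python illustration on a list of logits, not the transformers implementation; the function names `filter_probs` and `sample_token` are hypothetical, and the order used here (temperature, then top-k, then top-p) is this sketch's own choice.

```python
import math
import random
from typing import List


def filter_probs(logits: List[float], temperature: float = 0.6,
                 top_k: int = 50, top_p: float = 0.95) -> List[float]:
    """Hypothetical helper: return a renormalized probability vector after
    temperature scaling, top-k filtering and top-p (nucleus) filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Temperature: divide the logits before the softmax (lower = sharper).
    scaled = [x / temperature for x in logits]
    # Numerically stable softmax.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Token indices sorted from most to least probable.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # Top-k: keep only the k most probable tokens (top_k <= 0 disables it).
    keep = order[:top_k] if top_k > 0 else order
    k_mass = sum(probs[i] for i in keep)

    # Top-p: keep the smallest prefix whose renormalized mass reaches top_p.
    nucleus, cumulative = [], 0.0
    for i in keep:
        nucleus.append(i)
        cumulative += probs[i] / k_mass
        if cumulative >= top_p:
            break

    # Zero out everything outside the nucleus and renormalize the rest.
    kept_mass = sum(probs[i] for i in nucleus)
    filtered = [0.0] * len(probs)
    for i in nucleus:
        filtered[i] = probs[i] / kept_mass
    return filtered


def sample_token(logits: List[float], rng: random.Random, **kwargs) -> int:
    """Hypothetical helper: draw one token index from the filtered distribution."""
    probs = filter_probs(logits, **kwargs)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    print(sample_token([2.0, 1.0, 0.0, -1.0], rng, temperature=0.6, top_k=50, top_p=0.95))
```

With settings like `top_k=50, top_p=0.95`, a token can only be sampled if it is among the 50 most likely candidates and also inside the 95% probability nucleus; lowering the temperature concentrates mass on the top candidates before either cut is applied.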

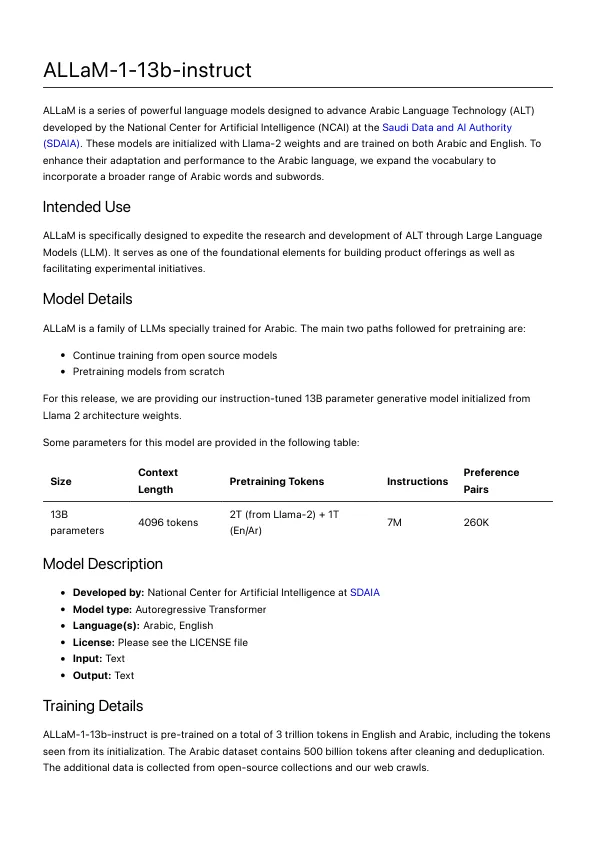

ALLaM-1-13B-Instruct

Main keywords