Institution name:


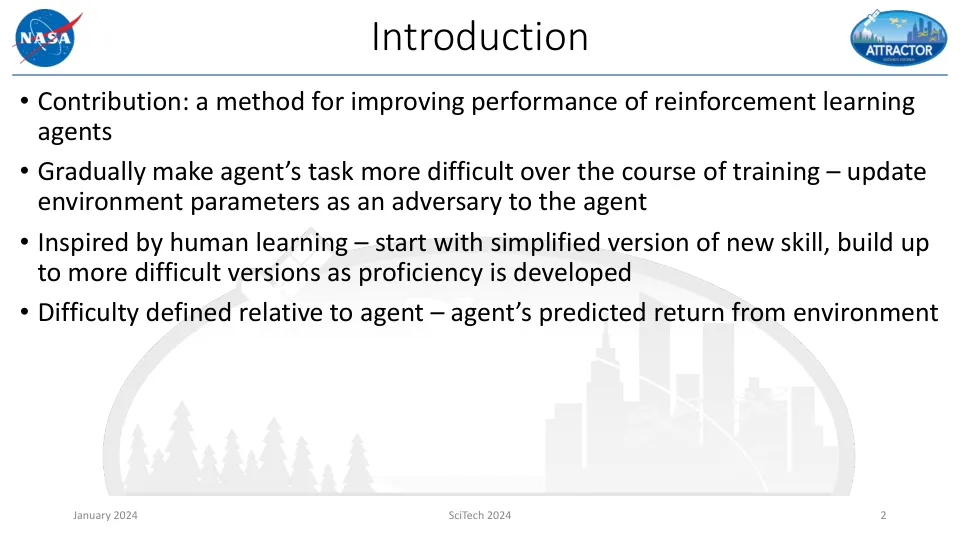

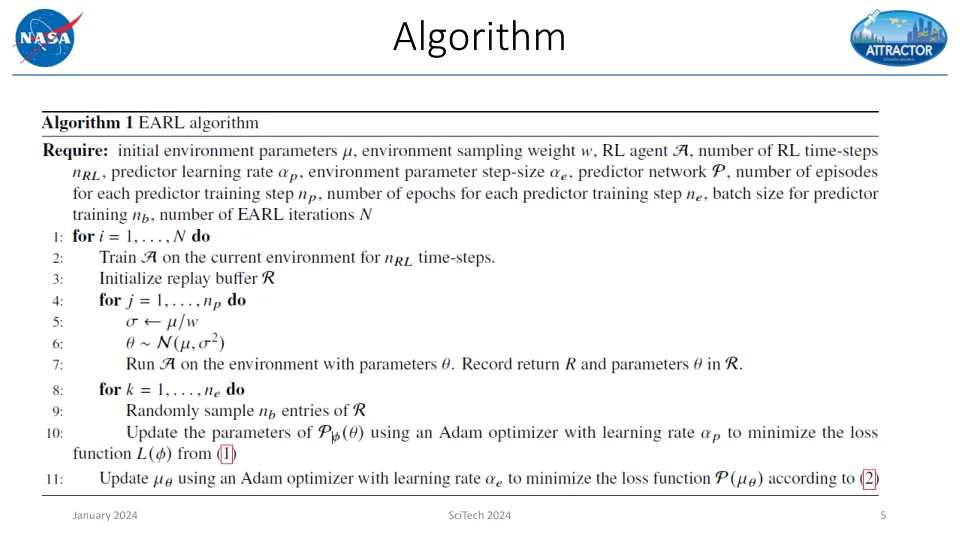

- Experimental results show that adversarial training improves performance over standard RL across all variations of the training environment.
- The gradient of the performance predictor is effective for updating the environment adversarially.
- EARL could be used to learn policies for complicated tasks.
- The method presented increases difficulty; how to decrease difficulty remains an open question.
- Future work will test EARL on more environments and with other baseline RL algorithms in the inner loop.
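The adversarial environment update summarized above can be sketched as one gradient step on the environment parameters that lowers the predicted return. This is a minimal illustration under stated assumptions, not the authors' implementation: the poster does not give the predictor's form, so `toy_predictor` is a made-up differentiable function of two environment parameters, central finite differences stand in for autodiff through a learned predictor, and `adversarial_env_step`, `numerical_gradient`, and the bounds are hypothetical names chosen for this example. Consistent with the poster, the sketch only increases difficulty.

```python
import math
from typing import Callable, List, Optional

Params = List[float]


def toy_predictor(theta: Params) -> float:
    """Hypothetical performance predictor (assumption, not from the poster):
    predicted return falls as the environment parameters move away from an
    'easy' setting at the origin."""
    return 200.0 * math.exp(-(theta[0] ** 2 + 0.5 * theta[1] ** 2))


def numerical_gradient(
    f: Callable[[Params], float], theta: Params, eps: float = 1e-5
) -> Params:
    """Central finite differences; a stand-in for autodiff through a learned predictor."""
    grad = []
    for i in range(len(theta)):
        up, down = list(theta), list(theta)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2.0 * eps))
    return grad


def adversarial_env_step(
    predictor: Callable[[Params], float],
    theta: Params,
    step_size: float = 1e-3,
    lower: Optional[Params] = None,
    upper: Optional[Params] = None,
) -> Params:
    """One adversarial update: step against the predicted-return gradient so the
    environment gets harder (lower predicted return), then clip to optional bounds."""
    grad = numerical_gradient(predictor, theta)
    new_theta = [t - step_size * g for t, g in zip(theta, grad)]
    if lower is not None:
        new_theta = [max(t, lo) for t, lo in zip(new_theta, lower)]
    if upper is not None:
        new_theta = [min(t, hi) for t, hi in zip(new_theta, upper)]
    return new_theta


if __name__ == "__main__":
    theta = [0.5, 0.5]
    for step in range(5):
        # In the full method an inner-loop RL algorithm would retrain the policy
        # here and the predictor would be refit; both are omitted in this sketch.
        theta = adversarial_env_step(toy_predictor, theta, upper=[2.0, 2.0])
        print(step, [round(t, 3) for t in theta], round(toy_predictor(theta), 2))
```

Clipping to bounds is one simple way to keep the adversary from pushing the environment into physically meaningless settings; the choice of bounds here is arbitrary.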

Environment Adversarial Reinforcement Learning (EARL)