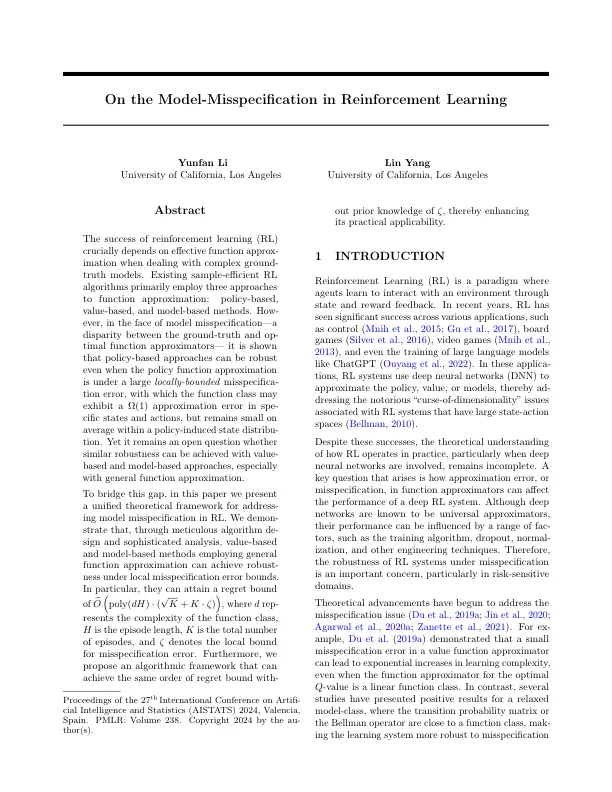

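The success of reinforcement learning (RL) crucially depends on effective function approximation when dealing with complex ground-truth models. Existing sample-efficient RL algorithms primarily employ three approaches to function approximation: policy-based, value-based, and model-based methods. However, in the face of model misspecification (a disparity between the ground-truth and optimal function approximators), it is shown that policy-based approaches can be robust even when the policy function approximation is under a large locally-bounded misspecification error, with which the function class may exhibit an Ω(1) approximation error in specific states and actions, but remains small on average within a policy-induced state distribution. Yet it remains an open question whether similar robustness can be achieved with value-based and model-based approaches, especially with general function approximation.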
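The gap between a worst-case error and an error averaged over a state distribution can be illustrated with a small numerical sketch. Everything below is an assumption made for illustration and is not taken from the paper: the number of states, the error values, the distribution, and the helper names `worst_case_error` and `average_error` are all hypothetical.

```python
# Toy illustration (hypothetical numbers): an approximation error that is
# large in one state can still be small on average under a state distribution.

num_states = 1000

# Hypothetical per-state approximation error of some function class:
# error 1.0 in a single "bad" state, 0.001 in every other state.
errors = [0.001] * num_states
errors[0] = 1.0

# Hypothetical policy-induced state distribution that rarely visits the bad state.
bad_state_mass = 0.001
state_dist = [(1.0 - bad_state_mass) / (num_states - 1)] * num_states
state_dist[0] = bad_state_mass


def worst_case_error(errors):
    """Largest error over all states (a sup-norm style measure)."""
    return max(errors)


def average_error(errors, state_dist):
    """Error averaged under the given state distribution."""
    return sum(p * e for p, e in zip(state_dist, errors))


sup_error = worst_case_error(errors)
avg_error = average_error(errors, state_dist)
print(f"worst-case error: {sup_error}, average error: {avg_error:.6f}")
```

In this toy setting the worst-case error stays at a constant level no matter how many states there are, while the averaged error is driven by how much mass the distribution places on the bad state. This is only meant to convey the intuition behind a "locally bounded" error, not the formal definition used in the paper.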

On the Model-Misspecification in Reinforcement Learning

Main keywords